Logstash is an open source data collection engine with real-time pipelining capabilities. Logstash can dynamically unify data from disparate sources and normalize the data into destinations of your choice. Cleanse and democratize all your data for diverse advanced downstream analytics and visualization use cases. (Source)

Event Hubs is a highly scalable data streaming platform capable of ingesting millions of events per second. Data sent to an Event Hub can be transformed and stored using any real-time analytics provider or batching/storage adapters. With the ability to provide publish-subscribe capabilities with low latency and at massive scale, Event Hubs serves as the “on ramp” for Big Data. (Source)

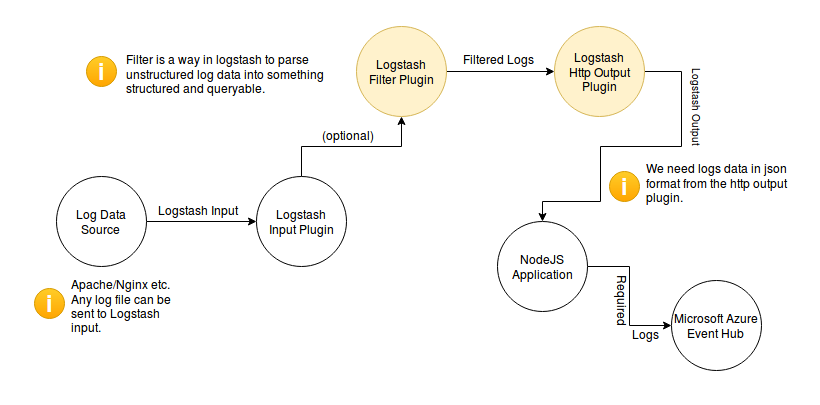

My main idea was to transfer the logs from Logstash to Microsoft Azure Event Hubs. Logstash has output plugins that ship data directly to many destinations, such as csv, boundary, circonus, cloudwatch and many more. However, there is no plugin that ships data directly to Azure Event Hubs.

However, there is an existing plugin, logstash-output-http, that sends events to an HTTP or HTTPS endpoint. So I used it to send the events to a small Node.js service, which receives them and pushes them to the Event Hub via Azure's Node.js API.
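The receiving side of this setup can be sketched with Node's built-in `http` module. This is a minimal illustration, not the code from the post's `transfer.js`: the function names `toEventHubEvents`, `createReceiver` and `sendEvents` are hypothetical, and it assumes the Logstash http output is configured with `http_method => "post"` and `format => "json"` (one event per request) or `format => "json_batch"` (a JSON array of events per request). The function that actually talks to Event Hubs is passed in, so the receiver can be exercised without an Azure account.

```javascript
const http = require("http");

// Turn one request body from logstash-output-http into Event Hub event objects.
// Assumption: the body is a single JSON object ("json" format) or a JSON
// array of objects ("json_batch" format).
function toEventHubEvents(rawBody) {
  const parsed = JSON.parse(rawBody); // throws SyntaxError on malformed input
  const events = Array.isArray(parsed) ? parsed : [parsed];
  return events.map((event) => ({ body: event }));
}

// Create (but do not start) an HTTP endpoint for Logstash to post to.
// `sendEvents` is a hypothetical async function that forwards an array of
// { body } objects to Event Hubs; it is injected rather than hard-coded.
function createReceiver(sendEvents) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405); // only POST requests carry events
      res.end();
      return;
    }
    const chunks = [];
    req.on("data", (chunk) => chunks.push(chunk));
    req.on("end", async () => {
      let events;
      try {
        events = toEventHubEvents(Buffer.concat(chunks).toString("utf8"));
      } catch (err) {
        res.writeHead(400); // body was not valid JSON
        res.end();
        return;
      }
      try {
        await sendEvents(events);
        res.writeHead(200);
      } catch (err) {
        res.writeHead(500); // forwarding to Event Hubs failed
      }
      res.end();
    });
  });
}
```

A caller would start it with something like `createReceiver(sendEvents).listen(port)`, using the same port that the Logstash http output's `url` points at. Returning a non-2xx status on failure lets Logstash see that the delivery did not succeed instead of silently dropping events.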

Now, you might wonder why I didn't write a native plugin, since that would be the easiest thing to use with Logstash. Well, Logstash plugins are written in Ruby, and I couldn't find a Ruby API for Azure Event Hubs. However, Logstash runs on JRuby, and there is a Java API for Event Hubs, so it should be possible to load the Java client's JAR from a Ruby plugin and build a native plugin that way.

You can find the source here. Just set the Event Hub connection string, the Event Hub path and the port that the Logstash HTTP output will post to in config.js, then move config.js to /etc/lemur/. Finally, start the script with `node transfer.js`.
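The three settings above could look roughly like this. This is a hedged sketch: the key names `connectionString`, `eventHubPath` and `port`, and the helpers `validateConfig` and `makeEventHubSender`, are hypothetical and may not match the repository's config.js. It also assumes the current `@azure/event-hubs` (v5) SDK and its `EventHubProducerClient`, which is newer than, and may differ from, the Azure Node.js package that transfer.js uses.

```javascript
// Hypothetical contents of config.js (a real file would use module.exports = { ... }).
// The connection string below is a placeholder, not a working value.
const exampleConfig = {
  connectionString:
    "Endpoint=sb://<namespace>.servicebus.windows.net/;SharedAccessKeyName=<key-name>;SharedAccessKey=<key>",
  eventHubPath: "logstash-events",
  port: 8080,
};

// Return a list of problems with the three settings (empty list means OK).
function validateConfig(config) {
  const errors = [];
  if (typeof config.connectionString !== "string" || !config.connectionString.startsWith("Endpoint=")) {
    errors.push("connectionString must be an Event Hubs connection string");
  }
  if (typeof config.eventHubPath !== "string" || config.eventHubPath.length === 0) {
    errors.push("eventHubPath is required");
  }
  if (!Number.isInteger(config.port) || config.port < 1 || config.port > 65535) {
    errors.push("port must be an integer between 1 and 65535");
  }
  return errors;
}

// Build an async sendEvents(events) function backed by @azure/event-hubs v5.
// The package is required lazily, so this file loads even if it is not installed.
function makeEventHubSender(config) {
  const { EventHubProducerClient } = require("@azure/event-hubs");
  const producer = new EventHubProducerClient(config.connectionString, config.eventHubPath);
  return async function sendEvents(events) {
    let batch = await producer.createBatch();
    for (const event of events) {
      if (!batch.tryAdd(event)) {
        // Current batch is full: send it and start a new one
        await producer.sendBatch(batch);
        batch = await producer.createBatch();
        if (!batch.tryAdd(event)) {
          throw new Error("event is too large for a single Event Hubs batch");
        }
      }
    }
    if (batch.count > 0) {
      await producer.sendBatch(batch);
    }
  };
}
```

An entry point in the spirit of transfer.js could `require("/etc/lemur/config.js")`, refuse to start if `validateConfig` reports problems, and hand `makeEventHubSender(config)` to whatever HTTP handler receives the Logstash events. Batching with `createBatch`/`tryAdd` keeps each send within the Event Hub's size limit instead of assuming every request fits in one message.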

I am working on a native Logstash plugin that can push data directly to Azure Event Hubs; until it is ready, this approach should work as a temporary solution.